Photo Stacking in iOS with Vision and Metal

In this tutorial, you’ll use Metal and the Vision framework to remove moving objects from pictures in iOS. You’ll learn how to stack, align and process multiple images so that any moving object disappears. By Yono Mittlefehldt.



Creating a Core Image Kernel

You’ll start with the actual kernel, which is written in the Metal Shading Language (MSL). MSL is based on C++ and looks very similar to it.

Add a new Metal File to your project and name it AverageStacking.metal. Leave the template code in and add the following code to the end of the file:

```metal
#include <CoreImage/CoreImage.h>

extern "C" { namespace coreimage {
  // 1
  float4 avgStacking(sample_t currentStack, sample_t newImage, float stackCount) {
    // 2
    float4 avg = ((currentStack * stackCount) + newImage) / (stackCount + 1.0);
    // 3
    avg = float4(avg.rgb, 1);
    // 4
    return avg;
  }
}}
```

With this code, you:

1. Define a new function called `avgStacking`, which returns a `float4`: a vector of four float values representing the red, green, blue and alpha channels of a pixel. The function is applied to two images at a time, so you need to keep track of the current average of all images seen so far. The `currentStack` parameter represents this average, while `stackCount` is the number of images used to create `currentStack`.
2. Calculate the weighted average of the two images. Since `currentStack` may already include information from multiple images, you multiply it by `stackCount` to give it the proper weight.
3. Set the alpha value of the average to 1 to make it completely opaque.
4. Return the average pixel value.
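To check that multiplying `currentStack` by `stackCount` really yields a running mean, here is a small CPU-side sketch of the same per-pixel arithmetic in Swift. It is only an illustration under stated assumptions, not how Core Image executes the kernel: a pixel is modeled as a `SIMD4<Float>` holding red, green, blue and alpha, and the function name `avgStackingCPU` is hypothetical.

```swift
// CPU sketch of the avgStacking kernel math (illustration only; the real
// kernel runs on the GPU through Core Image). Assumption: a pixel is modeled
// as SIMD4<Float> = (red, green, blue, alpha).
func avgStackingCPU(_ currentStack: SIMD4<Float>,
                    _ newImage: SIMD4<Float>,
                    _ stackCount: Float) -> SIMD4<Float> {
  // currentStack already averages `stackCount` images, so weight it accordingly.
  var avg = (currentStack * stackCount + newImage) / (stackCount + 1)
  // Force full opacity, like float4(avg.rgb, 1) in the kernel.
  avg.w = 1
  return avg
}

// Fold three single-pixel "frames" into a running average.
let frames: [SIMD4<Float>] = [
  SIMD4<Float>(0.0, 0.2, 0.4, 1),
  SIMD4<Float>(0.3, 0.2, 0.1, 1),
  SIMD4<Float>(0.6, 0.8, 0.1, 1),
]
var stack = frames[0]
for (i, frame) in frames.dropFirst().enumerated() {
  // After removing the first frame, the stack holds i + 1 images.
  stack = avgStackingCPU(stack, frame, Float(i + 1))
}
```

Each call gives the existing average `stackCount` times the weight of the new frame, so once all frames are folded in, every color channel equals the plain arithmetic mean of the inputs (here about 0.3, 0.4 and 0.2), and alpha is 1.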

The `sample_t` data type represents a pixel sample from an image.

OK, now that you have a kernel function, you need to create a `CIFilter` to use it! Add a new Swift File to the project and name it AverageStackingFilter.swift. Remove the import statement and add the following:

```swift
import CoreImage

class AverageStackingFilter: CIFilter {
  let kernel: CIBlendKernel
  var inputCurrentStack: CIImage?
  var inputNewImage: CIImage?
  var inputStackCount = 1.0
}
```

Here you’re defining your new CIFilter class and some properties you need for it. Notice how the three input variables correspond to the three parameters in your kernel function. Coincidence? ;]

By this point, Xcode is probably complaining that this class has no initializers. Time to fix that! Add the following to the class:

```swift
override init() {
  // 1
  guard let url = Bundle.main.url(forResource: "default",
                                  withExtension: "metallib") else {
    fatalError("Check your build settings.")
  }
  do {
    // 2
    let data = try Data(contentsOf: url)
    // 3
    kernel = try CIBlendKernel(
      functionName: "avgStacking",
      fromMetalLibraryData: data)
  } catch {
    print(error.localizedDescription)
    fatalError("Make sure the function names match")
  }
  // 4
  super.init()
}
```

With this initializer, you:

1. Get the URL for the compiled and linked Metal file.
2. Read the contents of the file.
3. Try to create a `CIBlendKernel` from the `avgStacking` function in the Metal file and panic if it fails.
4. Call the superclass's `init()`.

Wait just a minute… when did you compile and link your Metal file? Unfortunately, you haven’t yet. The good news, though, is you can have Xcode do it for you!

Compiling Your Kernel

To compile and link your Metal file, you need to add two flags to your Build Settings. So head on over there.

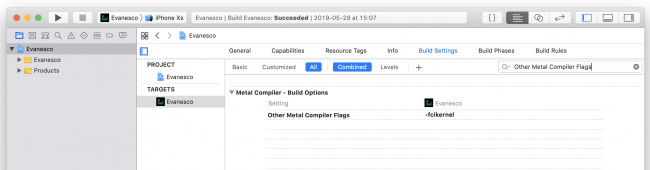

Search for Other Metal Compiler Flags and add `-fcikernel` to it:

Metal compiler flag


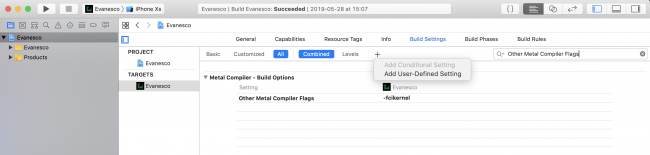

Next, click the + button and select Add User-Defined Setting:

Add user-defined setting


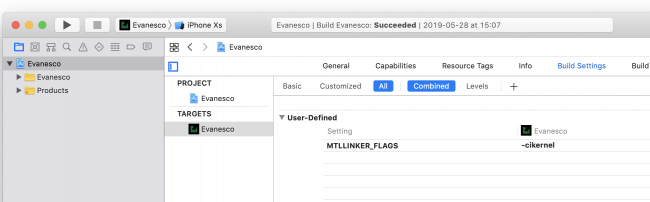

Call the setting `MTLLINKER_FLAGS` and set it to `-cikernel`:

Metal linker flag


Now, the next time you build your project, Xcode will compile your Metal files and link them in automatically.

Before you build, though, you still have a little work to do on your Core Image filter.

Back in AverageStackingFilter.swift, add the following method:

```swift
func outputImage() -> CIImage? {
  guard
    let inputCurrentStack = inputCurrentStack,
    let inputNewImage = inputNewImage
  else {
    return nil
  }
  return kernel.apply(
    extent: inputCurrentStack.extent,
    arguments: [inputCurrentStack, inputNewImage, inputStackCount])
}
```

This method is pretty important: it applies your kernel function to the input images, using the current stack's extent as the output extent, and returns the output image. It would be a useless filter if it didn’t do that! If either input image is missing, it returns `nil`.

Ugh, Xcode is still complaining! Fine. Add the following code to the class to calm it down:

```swift
required init?(coder aDecoder: NSCoder) {
  fatalError("init(coder:) has not been implemented")
}
```

You don’t need to be able to initialize this Core Image filter from an unarchiver, so you’ll just implement the bare minimum to make Xcode happy.

Using Your Filter

Open ImageProcessor.swift and add the following method to ImageProcessor:

```swift
func combineFrames() {
  // 1
  var finalImage = alignedFrameBuffer.removeFirst()
  // 2
  let filter = AverageStackingFilter()
  // 3
  for (i, image) in alignedFrameBuffer.enumerated() {
    // 4
    filter.inputCurrentStack = finalImage
    filter.inputNewImage = image
    filter.inputStackCount = Double(i + 1)
    // 5
    finalImage = filter.outputImage()!
  }
  // 6
  cleanup(image: finalImage)
}
```

Here you:

1. Initialize the final image with the first image in the aligned frame buffer, removing it from the buffer in the process.
2. Initialize your custom Core Image filter.
3. Loop through each of the remaining images in the aligned frame buffer.
4. Set up the filter parameters. Note that the final image is set as the current stack image. It’s important not to swap the input images, because the kernel multiplies only the current stack by the stack count. The stack count is set to the array index plus one: you removed the first image from the aligned frame buffer at the beginning of the method, so on each pass the stack already holds one more image than the current index.
5. Overwrite the final image with the new filter output image.
6. Call `cleanup(image:)` with the final image after all images have been combined.
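The warning about not swapping the input images is easier to see with numbers. The following sketch models the combining loop on the CPU under some simplifying assumptions: each frame is a flat array of `SIMD4<Float>` pixels, all frames are already aligned and equally sized, and the names `Frame`, `blendFrames`, `combineFramesCPU` and `gray` are hypothetical helpers, not part of the project.

```swift
// Hypothetical CPU model of combineFrames(). Assumption: a frame is a flat
// array of SIMD4<Float> pixels, and all frames are aligned and equal in size.
typealias Frame = [SIMD4<Float>]

// Per-pixel version of the avgStacking math.
func blendFrames(stack: Frame, newImage: Frame, stackCount: Float) -> Frame {
  return zip(stack, newImage).map { pair -> SIMD4<Float> in
    var avg = (pair.0 * stackCount + pair.1) / (stackCount + 1)
    avg.w = 1  // fully opaque, as in the kernel
    return avg
  }
}

// Seed with the first frame, then fold in the rest with stackCount = i + 1.
// `swapInputs` exists only to show what goes wrong when the arguments are swapped.
func combineFramesCPU(_ frames: [Frame], swapInputs: Bool = false) -> Frame? {
  guard var finalImage = frames.first else { return nil }
  for (i, image) in frames.dropFirst().enumerated() {
    let count = Float(i + 1)
    finalImage = swapInputs
      ? blendFrames(stack: image, newImage: finalImage, stackCount: count)
      : blendFrames(stack: finalImage, newImage: image, stackCount: count)
  }
  return finalImage
}

// A gray, fully opaque pixel with the given intensity.
func gray(_ v: Float) -> SIMD4<Float> { SIMD4<Float>(v, v, v, 1) }

// Three aligned two-pixel frames.
let frames: [Frame] = [
  [gray(0.0), gray(0.9)],
  [gray(0.3), gray(0.6)],
  [gray(0.6), gray(0.0)],
]
let correct = combineFramesCPU(frames)!
let swapped = combineFramesCPU(frames, swapInputs: true)!
```

With the inputs in the right order, each pixel ends up at the mean of its three samples (0.3 and 0.5). Swapping them gives the newest frame the weight meant for the accumulated stack, so the first pixel lands around 0.45 instead of 0.3.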

You may have noticed that cleanup() doesn’t take any parameters. Fix that by replacing cleanup() with the following:

```swift
func cleanup(image: CIImage) {
  frameBuffer = []
  alignedFrameBuffer = []
  isProcessingFrames = false
  if let completion = completion {
    DispatchQueue.main.async {
      completion(image)
    }
  }
  completion = nil
}
```

The only changes are the newly added parameter and the if statement that calls the completion handler on the main thread. The rest remains as it was.
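One detail in `cleanup(image:)` is easy to miss: `if let` copies the handler into a local constant, and the closure passed to `DispatchQueue.main.async` captures that constant, so setting `completion` to `nil` immediately afterward does not stop the handler from running. The sketch below demonstrates this without Core Image or a main run loop. The class `CompletionDemo`, its `deliveryQueue` (standing in for the main queue), and the use of a `String` in place of the final `CIImage` are all assumptions made for illustration.

```swift
import Dispatch

// Hypothetical stand-in for ImageProcessor's completion handling. A String
// plays the role of the final CIImage, and a private serial queue plays the
// role of DispatchQueue.main, so the sketch runs without Core Image.
final class CompletionDemo {
  var completion: ((String) -> Void)?
  let deliveryQueue: DispatchQueue

  init(deliveryQueue: DispatchQueue) {
    self.deliveryQueue = deliveryQueue
  }

  func cleanup(image: String) {
    // Bind the handler to a local constant before clearing the property.
    if let completion = completion {
      deliveryQueue.async {
        // Calls the captured local constant, not the stored property.
        completion(image)
      }
    }
    // Safe to clear: the dispatched closure already holds its own reference.
    completion = nil
  }
}

let queue = DispatchQueue(label: "delivery")
let demo = CompletionDemo(deliveryQueue: queue)
var received: String?
demo.completion = { received = $0 }
demo.cleanup(image: "final image")
queue.sync {}  // the queue is serial, so this waits for the async delivery
```

Clearing the stored property also makes the handler one-shot: a later call to `cleanup(image:)` finds `completion` empty and delivers nothing.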

At the bottom of processFrames(completion:), replace the call to cleanup() with:

```swift
combineFrames()
```

This way, your image processor will combine all the captured frames after it aligns them and then pass on the final image to the completion function.

Phew! Build and run this app and make those people, cars, and anything that moves in your shot disappear!

And poof! The cars disappear!


For more fun, wave a wand and yell Evanesco! while you use the app. Other people will definitely not think you’re weird. :]