3.

The Rendering Pipeline

Written by Marius Horga & Caroline Begbie

Now that you know a bit more about 3D models and rendering, it’s time to take a drive through the rendering pipeline. In this chapter, you’ll create a Metal app that renders a red cube. As you work your way through this chapter, you’ll get a closer look at the hardware that’s responsible for turning your 3D objects into the gorgeous pixels you see onscreen.

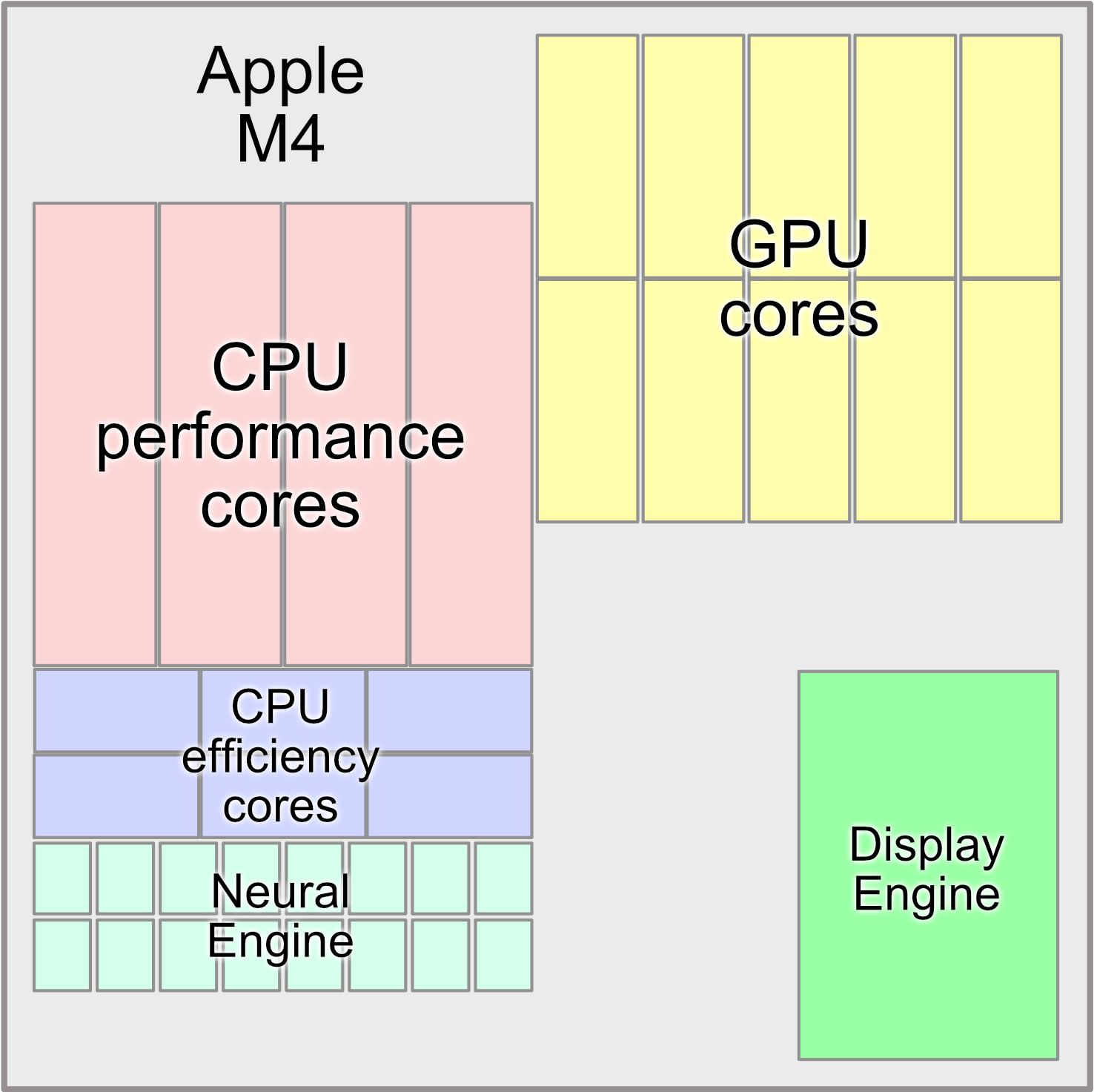

Unlike previous generations of hardware, where the GPU was on a discrete swappable board, Apple Silicon is a System on a Chip (SoC). This means that the Central Processing Unit (CPU) and Graphics Processing Unit (GPU), as well as the Neural Engine and memory, are all on one single chip. Having all the components on one chip brings many benefits. Each component is physically closer to the others, so data transfers faster and more efficiently.

Apple doesn’t share the designs of its chips, but it does provide high-level diagrams such as the following one:

When the M4 was first released in the iPad Pro, it had a ten-core CPU and a ten-core GPU. The core counts match, but CPU cores and GPU cores process work in very different ways.

The GPU and CPU

The GPU is a specialized hardware component that can process images, videos and massive amounts of data really fast. The amount of data processed in a specific unit of time is known as throughput. The CPU, on the other hand, manages resources and is responsible for the computer’s operations. Although the CPU can’t process huge amounts of data like the GPU, it can process many sequential tasks (one after another) really fast. The time necessary to process a task is known as latency.

The ideal setup combines low latency and high throughput. Low latency lets the CPU execute queued tasks serially without the system becoming slow or unresponsive, while high throughput lets the GPU render videos and games asynchronously without stalling the CPU. The GPU has a highly parallelized architecture that specializes in doing the same task repeatedly, with little or no data transfer, so it can process much larger amounts of data.

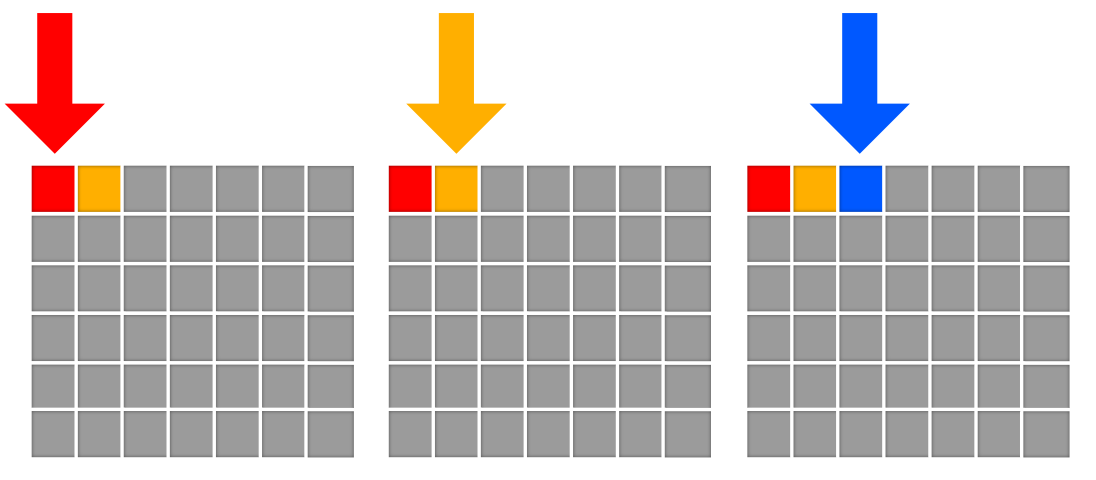

To visualize this, if you are placing colored pixels into a texture, the CPU would place them one at a time, sequentially:

The GPU would take longer to set up the placement, but would then perform the operation all at once in parallel:

You can see that if you are doing the same operation on many items, the GPU is well-suited to the task.
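The figures compare the two approaches. The Swift sketch below is only a rough CPU-side analogy, not GPU code. It fills a pixel array in two ways. First it goes one pixel at a time. Then it runs the same tiny job for every row at once through Dispatch’s DispatchQueue.concurrentPerform(iterations:execute:). Pixel, fillSequentially and fillInParallel are hypothetical names chosen for this illustration.

```swift
import Dispatch

// Hypothetical RGBA pixel, used only for this illustration.
struct Pixel: Equatable {
  var r, g, b, a: UInt8
}

// CPU style: visit every pixel one after another.
func fillSequentially(width: Int, height: Int, color: Pixel) -> [Pixel] {
  var pixels = [Pixel](repeating: Pixel(r: 0, g: 0, b: 0, a: 0), count: width * height)
  for index in pixels.indices {
    pixels[index] = color
  }
  return pixels
}

// Data-parallel style: the same tiny job (fill one row) runs for every row,
// spread across the available CPU cores. Rows never overlap, so no locks are needed.
func fillInParallel(width: Int, height: Int, color: Pixel) -> [Pixel] {
  var pixels = [Pixel](repeating: Pixel(r: 0, g: 0, b: 0, a: 0), count: width * height)
  pixels.withUnsafeMutableBufferPointer { buffer in
    let output = buffer  // copy of the pointer; writes still land in the array's storage
    DispatchQueue.concurrentPerform(iterations: height) { row in
      for column in 0..<width {
        output[row * width + column] = color
      }
    }
  }
  return pixels
}

let red = Pixel(r: 255, g: 0, b: 0, a: 255)
let sequential = fillSequentially(width: 64, height: 48, color: red)
let parallel = fillInParallel(width: 64, height: 48, color: red)
```

Both versions produce the same pixels. The parallel version pays off only because every row is independent of the others. That is exactly the kind of work a GPU is built for, and a GPU has far more threads available than a CPU has cores.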

It’s time for you to walk through the graphics pipeline in a project. You’ll first set up the GPU commands and resources on the CPU, then discover how the GPU processes them.

The Metal Project

So far, you’ve been using Playgrounds to learn about Metal. Playgrounds are great for testing and learning new concepts, but it’s also important to understand how to set up a full Metal project using SwiftUI.

➤ In Xcode, create a new project using the Multiplatform App template.

➤ Name your project Pipeline, and fill out your team and organization identifier. Set the Testing System and Storage settings to None.

➤ Choose the location for your new project.

Excellent, you now have a fancy, new SwiftUI app. ContentView.swift is the main view for the app; this is where you’ll call your Metal view.

The MetalKit framework contains an MTKView, which is a special Metal rendering view. This is a UIView on iOS and an NSView on macOS. To interface with UIKit or Cocoa UI elements, you’ll use a Representable protocol (UIViewRepresentable on iOS, NSViewRepresentable on macOS) that sits between SwiftUI and your MTKView.

This configuration is a bit complicated, so in the resources folder for this chapter, you’ll find a pre-made MetalView.swift.

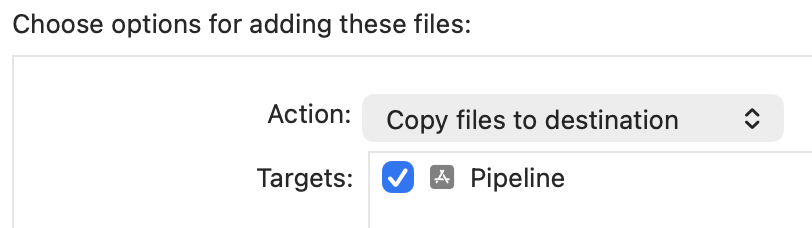

➤ Drag this file into your project, making sure that you choose to copy the file and add it to the app’s target.

➤ Open MetalView.swift.

MetalView is the View interface, hosting either the UIView or the NSView, depending on the operating system. When you create the view, MetalView instantiates a Renderer class. You’ll replace this Renderer with your own class shortly.

➤ Open ContentView.swift, and change the contents of body to:

```swift
VStack {
  MetalView()
    .border(Color.black, width: 2)
  Text("Hello, Metal!")
}
.padding()
```

Here, you add MetalView to the view hierarchy and give it a border.

➤ Build and run your app, choosing My Mac as the destination.

Note: If you are not developing on Apple Silicon, you should still be able to follow along by running the app on a recent device.

You’ll see your hosted MTKView. One advantage of using SwiftUI is that it’s relatively easy to arrange UI elements around your Metal view, such as the “Hello, Metal!” text below it. You can just as easily layer your app’s user interface over the MTKView.

You now have a choice. You can subclass MTKView and replace the MTKView in MetalView with the subclassed one. In this case, the subclass’s draw(_:) would get called every frame, and you’d put your drawing code in that method. However, in this book, you’ll set up a Renderer class that conforms to MTKViewDelegate, and you’ll set Renderer as the delegate of MTKView. MTKView calls a delegate method every frame, and this is where you’ll place the necessary drawing code.

Note: If you’re coming from a different API world, you might be looking for a game loop construct. You do have the option of using CAMetalDisplayLink for more control over timing, but Apple introduced MetalKit with its protocols to manage the game loop more easily.

The Renderer Class

➤ First, remove the three-line Renderer class from MetalView.swift. This will give a compile error until you create your own Renderer.

➤ Create a new Swift file named Renderer.swift, and replace its contents with the following code:

```swift
import MetalKit

class Renderer: NSObject {
  init(metalView: MTKView) {
    super.init()
  }
}

extension Renderer: MTKViewDelegate {
  func mtkView(
    _ view: MTKView,
    drawableSizeWillChange size: CGSize
  ) {
  }

  func draw(in view: MTKView) {
    print("draw")
  }
}
```

Here, you create an initializer and make Renderer conform to MTKViewDelegate with the two MTKView delegate methods:

- mtkView(_:drawableSizeWillChange:): Called every time the size of the window changes. This allows you to update render texture sizes and camera projection.

- draw(in:): Called every frame. This is where you write your render code. Until you have something to render, use the print statement to verify that your app is calling draw(in:) every frame.
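MTKView drives the frame loop and calls back into its delegate. The pattern itself doesn’t depend on Metal, so here’s a framework-free sketch of it. FrameDelegate, FakeMetalView and CountingRenderer are hypothetical stand-ins, not MetalKit types. Unlike the real view, the fake one calls draw(in:) on demand rather than in sync with the display.

```swift
// Hypothetical stand-ins for MTKViewDelegate and MTKView; NOT MetalKit types.
protocol FrameDelegate: AnyObject {
  func view(_ view: FakeMetalView, drawableSizeWillChange size: (width: Int, height: Int))
  func draw(in view: FakeMetalView)
}

final class FakeMetalView {
  // Like MTKView.delegate, the view holds its delegate weakly.
  weak var delegate: FrameDelegate?
  private(set) var drawableSize = (width: 0, height: 0)

  // Simulates a window resize: the delegate hears about the new size once.
  func resize(width: Int, height: Int) {
    drawableSize = (width: width, height: height)
    delegate?.view(self, drawableSizeWillChange: drawableSize)
  }

  // Simulates `count` display refreshes: one draw callback per frame.
  func runFrames(_ count: Int) {
    for _ in 0..<count {
      delegate?.draw(in: self)
    }
  }
}

final class CountingRenderer: FrameDelegate {
  private(set) var framesDrawn = 0
  private(set) var lastSize = (width: 0, height: 0)

  func view(_ view: FakeMetalView, drawableSizeWillChange size: (width: Int, height: Int)) {
    lastSize = size  // a real renderer would resize textures and update its projection here
  }

  func draw(in view: FakeMetalView) {
    framesDrawn += 1  // a real renderer would encode and commit GPU commands here
  }
}

let view = FakeMetalView()
let renderer = CountingRenderer()
view.delegate = renderer
view.resize(width: 800, height: 600)
view.runFrames(3)
```

Because the delegate reference is weak, some other object must keep a strong reference to the renderer, or the callbacks silently stop.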

Initialization

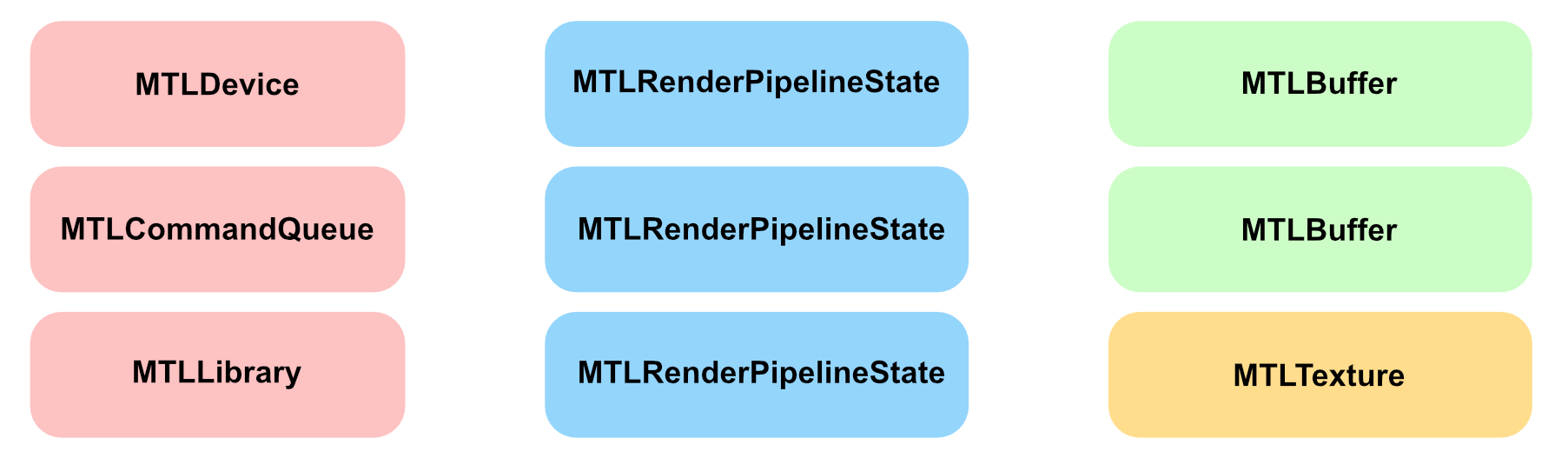

Just as you did in the first chapter, you need to set up the Metal environment. You should instantiate as many objects as you can up-front rather than create them during every frame. The following diagram indicates some of the Metal objects you can create at the start of the app.

- MTLDevice: The software reference to the GPU hardware device.

- MTLCommandQueue: Responsible for creating and organizing MTLCommandBuffers every frame.

- MTLLibrary: Contains your compiled vertex and fragment shader functions.

- MTLRenderPipelineState: Sets the information for the draw, such as which shader functions to use, what depth and color settings to use and how to read the vertex data.

- MTLBuffer: Holds data, such as vertex information, in a form that you can send to the GPU.

- MTLTexture: Holds image data in a specified pixel format.

Typically, you’ll have one MTLDevice, one MTLCommandQueue and one MTLLibrary object in your app. You’ll have several pipeline state objects. MTLRenderPipelineState objects define different ways of shading, and MTLComputePipelineState objects define compute operations, such as particle effects or ray tracing. You’ll have many MTLBuffers to hold vertex data and MTLTextures for texturing your models.

Before you can use these objects, you need to initialize them.

➤ Open Renderer.swift, and add these properties to Renderer:

```swift
static var device: MTLDevice!
static var commandQueue: MTLCommandQueue!
static var library: MTLLibrary!
var mesh: MTKMesh!
var vertexBuffer: MTLBuffer!
var pipelineState: MTLRenderPipelineState!
```

All of these properties are currently implicitly unwrapped optionals for convenience, but you can add error-checking later if you wish.

You’re using static (type) properties for the device, the command queue and the library to ensure that only one of each exists. In rare cases, you may require more than one, but in most apps, one is enough.

➤ Still in Renderer.swift, add the following code to init(metalView:) before super.init():

```swift
guard
  let device = MTLCreateSystemDefaultDevice(),
  let commandQueue = device.makeCommandQueue() else {
    fatalError("GPU not available")
}
Self.device = device
Self.commandQueue = commandQueue
metalView.device = device
```

This code gets a reference to the system’s default GPU, creates the command queue and assigns the device to the view.

➤ After super.init(), add this:

```swift
metalView.clearColor = MTLClearColor(
  red: 1.0,
  green: 1.0,
  blue: 0.8,
  alpha: 1.0)
metalView.delegate = self
```

This code sets metalView.clearColor to a cream color. It also sets Renderer as the delegate for metalView so the view will call the MTKViewDelegate drawing methods.

➤ Build and run the app to make sure everything’s set up and working. If everything is good, you’ll see the SwiftUI view as before, and in the debug console, you’ll see the word “draw” repeatedly.

Note: You won’t see metalView’s cream color because you’re not asking the GPU to do any drawing yet.

Create the Mesh

You’ve already created a sphere and a cone using Model I/O; now it’s time to create a cube.

➤ In init(metalView:), before calling super.init(), add this:

```swift
// create the mesh
let allocator = MTKMeshBufferAllocator(device: device)
let size: Float = 0.8
let mdlMesh = MDLMesh(
  boxWithExtent: [size, size, size],
  segments: [1, 1, 1],
  inwardNormals: false,
  geometryType: .triangles,
  allocator: allocator)
do {
  mesh = try MTKMesh(mesh: mdlMesh, device: device)
} catch {
  print(error.localizedDescription)
}
```

This code creates the cube mesh, as you did in the previous chapter.
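Model I/O gives you vertex data plus an index buffer that records which vertices make up each triangle. To show what that indexed layout looks like, here’s a hypothetical hand-built cube, makeCube(size:), with 8 shared corners and 36 indices (two triangles on each of six faces). It assumes the box is centered on the origin, so an extent of 0.8 runs from -0.4 to 0.4. MDLMesh also generates normals, so it probably stores a separate copy of each corner per face. Don’t expect its vertex count to match this sketch.

```swift
// Hypothetical helper, not part of Model I/O: an indexed cube centered on the origin.
func makeCube(size: Float) -> (positions: [SIMD3<Float>], indices: [UInt16]) {
  let h = size / 2
  let positions: [SIMD3<Float>] = [
    SIMD3(-h, -h, h), SIMD3(h, -h, h), SIMD3(h, h, h), SIMD3(-h, h, h),      // z = +h
    SIMD3(-h, -h, -h), SIMD3(h, -h, -h), SIMD3(h, h, -h), SIMD3(-h, h, -h),  // z = -h
  ]
  // Two triangles per face, six faces. Each number selects a corner from `positions`.
  let indices: [UInt16] = [
    0, 1, 2, 0, 2, 3,  // front
    5, 4, 7, 5, 7, 6,  // back
    4, 0, 3, 4, 3, 7,  // left
    1, 5, 6, 1, 6, 2,  // right
    3, 2, 6, 3, 6, 7,  // top
    4, 5, 1, 4, 1, 0,  // bottom
  ]
  return (positions, indices)
}

let cube = makeCube(size: 0.8)
print("\(cube.positions.count) positions, \(cube.indices.count) indices")
```

The 8 positions are stored once and referenced 36 times. That saving is why drawIndexedPrimitives takes an index buffer as well as the vertex buffer.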

➤ Then, set up the MTLBuffer that contains the vertex data you’ll send to the GPU.

```swift
vertexBuffer = mesh.vertexBuffers[0].buffer
```

This code stores a reference to the MTLBuffer that MTKMesh created to hold the mesh’s vertex data. Next, you need to set up the pipeline state so that the GPU will know how to render the data.

Set Up the Metal Library

First, set up the MTLLibrary and ensure that the vertex and fragment shader functions are present.

➤ Continue adding code before super.init():

```swift
// create the shader function library
let library = device.makeDefaultLibrary()
Self.library = library
let vertexFunction = library?.makeFunction(name: "vertex_main")
let fragmentFunction =
  library?.makeFunction(name: "fragment_main")
```

Here, you load the default library and look up the vertex and fragment shader functions by name. You’ll create these shader functions later in this chapter. Until they exist, makeFunction(name:) returns nil. Shader functions are compiled when you compile your project, and the compiled result is stored in the library.

Create the Pipeline State

To configure the GPU’s state, you create a pipeline state object (PSO). This pipeline state can be a render pipeline state for rendering vertices, or a compute pipeline state for running a compute kernel.

➤ Continue adding code before super.init():

```swift
// create the pipeline state object
let pipelineDescriptor = MTLRenderPipelineDescriptor()
pipelineDescriptor.vertexFunction = vertexFunction
pipelineDescriptor.fragmentFunction = fragmentFunction
pipelineDescriptor.colorAttachments[0].pixelFormat =
  metalView.colorPixelFormat
pipelineDescriptor.vertexDescriptor =
  MTKMetalVertexDescriptorFromModelIO(mdlMesh.vertexDescriptor)
do {
  pipelineState =
    try device.makeRenderPipelineState(
      descriptor: pipelineDescriptor)
} catch {
  fatalError(error.localizedDescription)
}
```

The PSO holds a preconfigured state that the GPU can switch into. The GPU needs to know its complete state before it can start managing vertices. Here, you set the two shader functions the GPU will call and the pixel format for the texture to which the GPU will write. You also set the pipeline’s vertex descriptor; this is how the GPU will know how to interpret the vertex data that you’ll present in the mesh data MTLBuffer.

Note: If you need to use a different data buffer layout or call different vertex or fragment functions, you’ll need additional pipeline states. Creating pipeline states is relatively time-consuming — which is why you do it up-front — but switching pipeline states during frames is fast and efficient.
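One common way to follow this advice is to build every pipeline state during initialization and keep it in a lookup table keyed by its configuration. The sketch below models that idea without Metal. PipelineKey, FakePipelineState and PipelineCache are hypothetical types, and plain strings stand in for MTLFunction and MTLPixelFormat.

```swift
// Hypothetical stand-ins: strings play the role of MTLFunction and MTLPixelFormat.
struct PipelineKey: Hashable {
  let vertexFunction: String
  let fragmentFunction: String
  let pixelFormat: String
}

// Stand-in for MTLRenderPipelineState: expensive to build, cheap to reuse.
final class FakePipelineState {
  let key: PipelineKey
  init(key: PipelineKey) { self.key = key }
}

final class PipelineCache {
  private var states: [PipelineKey: FakePipelineState] = [:]
  private(set) var creationCount = 0

  // Builds each distinct configuration once; later calls return the stored object.
  func state(for key: PipelineKey) -> FakePipelineState {
    if let existing = states[key] { return existing }
    creationCount += 1  // the slow path, ideally taken only during initialization
    let state = FakePipelineState(key: key)
    states[key] = state
    return state
  }
}

let cache = PipelineCache()
let plain = PipelineKey(vertexFunction: "vertex_main", fragmentFunction: "fragment_main",
                        pixelFormat: "bgra8Unorm")
let tinted = PipelineKey(vertexFunction: "vertex_main", fragmentFunction: "fragment_tinted",
                         pixelFormat: "bgra8Unorm")

// Build both up front...
_ = cache.state(for: plain)
_ = cache.state(for: tinted)
// ...then switch between them every frame without rebuilding.
for frame in 0..<60 {
  _ = cache.state(for: frame.isMultiple(of: 2) ? plain : tinted)
}
```

Even with 60 frames of switching, only two pipeline states are ever created. The hypothetical "fragment_tinted" function only marks a second configuration.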

The initialization is complete, and your project compiles. Next up, you’ll start on drawing your model.

Render Frames

MTKView calls draw(in:) for every frame; this is where you’ll set up your GPU render commands.

➤ In draw(in:), replace the print statement with this:

```swift
guard
  let commandBuffer = Self.commandQueue.makeCommandBuffer(),
  let descriptor = view.currentRenderPassDescriptor,
  let renderEncoder =
    commandBuffer.makeRenderCommandEncoder(
      descriptor: descriptor) else {
    return
}
```

You’ll send the GPU a series of commands, recorded by command encoders. In one frame, you might have multiple command encoders, and the command buffer manages these.

You create a render command encoder using a render pass descriptor. This contains the render target textures that the GPU will draw into. In a complex app, you may well have multiple render passes in one frame, with multiple target textures. You’ll learn how to chain render passes together later.

➤ Continue adding this code:

```swift
// drawing code goes here

// 1
renderEncoder.endEncoding()
// 2
guard let drawable = view.currentDrawable else {
  return
}
commandBuffer.present(drawable)
// 3
commandBuffer.commit()
```

Here’s a closer look at the code:

1. After adding the GPU commands to a command encoder, you end its encoding.

2. You schedule the view’s drawable texture to be presented on screen once the GPU has finished rendering into it.

3. When you commit the command buffer, you send the encoded commands to the GPU for execution.

Drawing

It’s time to set up the list of commands that the GPU will need to draw your frame. In other words, you’ll:

- Set the pipeline state to configure the GPU hardware.

- Give the GPU the vertex data.

- Issue a draw call using the mesh’s submesh groups.

➤ Still in draw(in:), replace the comment:

```swift
// drawing code goes here
```

With the following:

```swift
renderEncoder.setRenderPipelineState(pipelineState)
renderEncoder.setVertexBuffer(vertexBuffer, offset: 0, index: 0)
for submesh in mesh.submeshes {
  renderEncoder.drawIndexedPrimitives(
    type: .triangle,
    indexCount: submesh.indexCount,
    indexType: submesh.indexType,
    indexBuffer: submesh.indexBuffer.buffer,
    indexBufferOffset: submesh.indexBuffer.offset)
}
```

Great, you set up the GPU commands to set the pipeline state and the vertex buffer, and to perform the draw calls on the mesh’s submeshes. When you commit the command buffer at the end of draw(in:), you’re telling the GPU that the data and pipeline are ready, and it’s time for the GPU to take over.

The Render Pipeline

Are you ready to investigate the GPU pipeline? Great, let’s get to it!

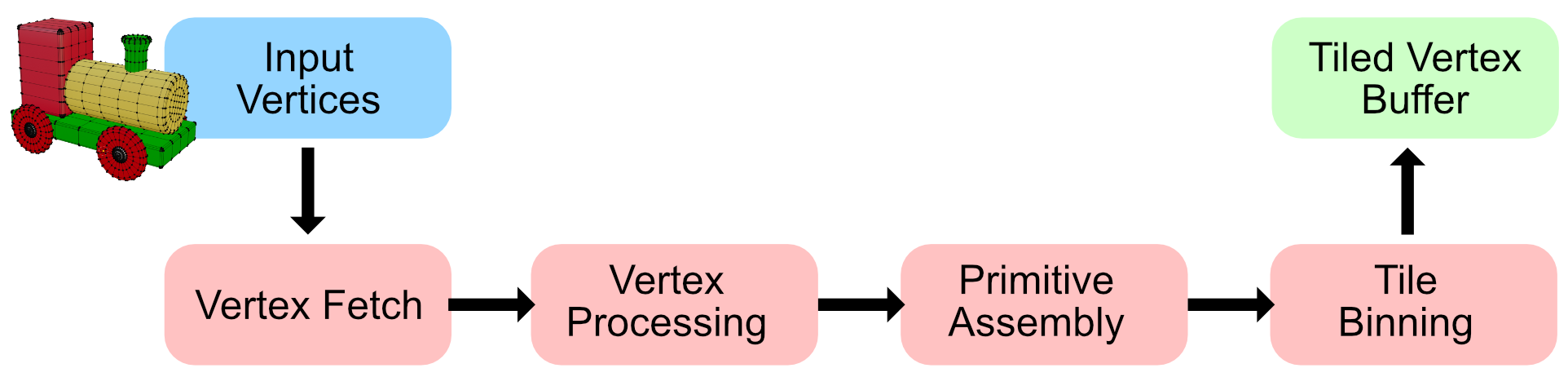

Apple Silicon’s Tile-Based Deferred Renderer (TBDR) GPU has two main rendering phases:

- The Tiling phase: Here’s where the GPU splits the screen into tiles and works out which tiles each primitive covers.

- The Rendering phase: The GPU processes all covered pixels during this phase.

By keeping these phases separate, the GPU can perform rendering at the same time as tiling, if GPU resources allow.

First, look at each of the processes in the tiling phase:

Tiling: Vertex Fetch

The name of this stage varies among different graphics Application Programming Interfaces (APIs). For example, DirectX calls it Input Assembler.

To start rendering 3D content, you first need a scene. A scene consists of models that have meshes of vertices.

As you saw in the previous chapter, you use a vertex descriptor to define the way vertices are read in along with their attributes — such as position, texture coordinates, normal and color.

When the GPU fetches the vertex buffer, the MTLRenderCommandEncoder draw call tells the GPU whether the buffer is indexed. If it isn’t indexed, the GPU treats the buffer as an array and reads in one element at a time, in order. If it is indexed, the GPU uses each value in the index buffer to look up the matching vertex.
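Here’s a simplified model of the two fetch modes in Swift, using 2D positions for brevity. fetchVertices(_:indices:) is a hypothetical helper. With no index buffer, it returns the vertices in order. With an index buffer, it looks up each vertex by index, so a quad needs only four stored vertices for its two triangles.

```swift
// Hypothetical model of vertex fetch; positions are 2D for brevity.
func fetchVertices(_ vertices: [SIMD2<Float>], indices: [UInt16]?) -> [SIMD2<Float>] {
  guard let indices = indices else {
    // Non-indexed draw: the buffer is treated as an array and read in order.
    return vertices
  }
  // Indexed draw: each index selects a vertex, so shared vertices are read more than once.
  return indices.map { vertices[Int($0)] }
}

// A quad: four unique vertices, drawn as two triangles that share an edge.
let quad: [SIMD2<Float>] = [
  SIMD2<Float>(-1, -1), SIMD2<Float>(1, -1), SIMD2<Float>(1, 1), SIMD2<Float>(-1, 1),
]
let quadIndices: [UInt16] = [0, 1, 2, 0, 2, 3]
let fetched = fetchVertices(quad, indices: quadIndices)
```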

The GPU then sends the vertex data on to the Vertex Processing stage.

Tiling: Vertex Processing

In the Vertex Processing stage, vertices are processed individually. This is your opportunity to write code to calculate per-vertex lighting and color. More importantly, you can transform vertex coordinates through various coordinate spaces to reach their position in the final render.

Creating a Vertex Shader

It’s time to see vertex processing in action. The vertex shader you’re about to write is minimal, but it encapsulates most of the necessary vertex shader syntax you’ll need in this and subsequent chapters.

➤ Create a new file using the Metal File template, and name it Shaders.metal. Then, add this code at the end of the file:

```metal
// 1
struct VertexIn {
  float4 position [[attribute(0)]];
};

// 2
vertex float4 vertex_main(const VertexIn vertexIn [[stage_in]]) {
  return vertexIn.position;
}
```

Going through the code:

1. Create a VertexIn structure to describe the vertex attributes that match the vertex descriptor you set up earlier. In this case, just position.

2. Implement a vertex shader, vertex_main, that takes in VertexIn structures and returns vertex positions as float4 types.

Remember that in the current app, submeshes index the vertices in the vertex buffer. Through the [[stage_in]] attribute, the vertex shader receives the VertexIn structure that the GPU assembled, using the vertex descriptor, for the vertex at the current index.

To recap, the CPU sent the GPU a vertex buffer that you created from the model’s mesh. You configured the vertex buffer using a vertex descriptor that tells the GPU how the vertex data is structured. On the GPU, you created a structure to encapsulate the vertex attributes. The vertex shader takes in this structure as a function argument. Through the [[stage_in]] qualifier, it acknowledges that position comes from the CPU via the [[attribute(0)]] position in the vertex buffer. The vertex shader then runs once for each vertex and returns that vertex’s position as a float4.

Note: When you use a vertex descriptor with attributes, you don’t have to match types. The MTLBuffer position is a float3, whereas VertexIn defines the position as a float4.
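The conversion happens during vertex fetch, before your shader runs. The sketch below models it in Swift under one assumption worth checking against the Metal Shading Language specification: when a float3 attribute feeds a float4 input, the missing w component is filled with 1. fetchAsFloat4(_:) and vertexMain(_:) are hypothetical stand-ins. The map over the array mirrors how the vertex function runs once per vertex, independently of the others.

```swift
// Assumption (see lead-in): a float3 attribute read into a float4 input gets w = 1.
func fetchAsFloat4(_ stored: SIMD3<Float>) -> SIMD4<Float> {
  return SIMD4<Float>(stored.x, stored.y, stored.z, 1)
}

// Swift stand-in for vertex_main: it sees one vertex at a time and returns its position.
func vertexMain(_ position: SIMD4<Float>) -> SIMD4<Float> {
  return position
}

// Positions as they might sit in the MTLBuffer: three floats each.
let bufferPositions: [SIMD3<Float>] = [
  SIMD3<Float>(0, 0.4, 0),
  SIMD3<Float>(-0.4, -0.4, 0),
  SIMD3<Float>(0.4, -0.4, 0),
]
// Every vertex is processed independently, like a map over the buffer.
let shaderOutputs = bufferPositions.map { vertexMain(fetchAsFloat4($0)) }
```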

When the vertex shader has finished processing, the GPU then groups the vertices and passes them to the Primitive Assembly stage.

Tiling: Primitive Assembly

The previous stage sent processed vertices grouped into blocks of data to this stage. The important thing to keep in mind is that vertices belonging to the same geometrical shape (primitive) are always in the same block. That means that the one vertex of a point, or the two vertices of a line, or the three vertices of a triangle, will always be in the same block, hence a second block fetch isn’t necessary.

Along with vertices, the CPU also sends vertex connectivity information when it issues the draw call command, like this:

```swift
renderEncoder.drawIndexedPrimitives(
  type: .triangle,
  indexCount: submesh.indexCount,
  indexType: submesh.indexType,
  indexBuffer: submesh.indexBuffer.buffer,
  indexBufferOffset: submesh.indexBuffer.offset)
```

The first argument of the draw function contains the most important information about vertex connectivity. In this case, it tells the GPU that it should draw triangles from the vertex buffer it sent.

The Metal API provides five primitive types:

- point: For each vertex, rasterize a point. You can specify the size of a point with the [[point_size]] attribute in the vertex shader.

- line: For each pair of vertices, rasterize a line between them. If a vertex was already included in a line, it cannot be included again in other lines. The last vertex is ignored if there is an odd number of vertices.

- lineStrip: Same as a simple line, except that the line strip connects all adjacent vertices and forms a poly-line. Each vertex (except the first) is connected to the previous vertex.

- triangle: For every sequence of three vertices, rasterize a triangle. The last vertices are ignored if they cannot form another triangle.

- triangleStrip: Same as a simple triangle, except that each vertex after the first two forms a new triangle with the two vertices before it, so adjacent triangles share vertices.
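These counting rules are easy to mix up, so here they are as a small Swift function. PrimitiveType is a hypothetical mirror of the five MTLPrimitiveType cases. primitiveCount(_:vertexCount:) simply encodes the rules as described in the list above.

```swift
// Hypothetical mirror of MTLPrimitiveType's five cases, for illustration only.
enum PrimitiveType {
  case point, line, lineStrip, triangle, triangleStrip
}

// How many primitives the rules above produce from `n` vertices.
func primitiveCount(_ type: PrimitiveType, vertexCount n: Int) -> Int {
  switch type {
  case .point:         return n               // one point per vertex
  case .line:          return n / 2           // an odd leftover vertex is ignored
  case .lineStrip:     return max(n - 1, 0)   // each vertex after the first adds a segment
  case .triangle:      return n / 3           // leftover vertices are ignored
  case .triangleStrip: return max(n - 2, 0)   // each vertex after the second adds a triangle
  }
}

for type in [PrimitiveType.point, .line, .lineStrip, .triangle, .triangleStrip] {
  print(type, primitiveCount(type, vertexCount: 7))
}
```

With seven vertices, strips produce the most primitives because they reuse vertices. Independent lines and triangles drop whatever doesn’t fill a complete primitive.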

As you read in the previous chapter, the pipeline specifies the winding order of the vertices. Suppose the front-facing winding order is counter-clockwise. If a triangle’s vertices are ordered counter-clockwise, the triangle is front-facing; otherwise, it’s back-facing and can be culled, since you can’t see its color and lighting. Primitives are also culled when they’re entirely outside the visible area; however, if they’re only partially off-screen, they’ll be clipped.

For efficiency, you should set the winding order and enable back-face culling on the render command encoder before drawing, using setFrontFacing(_:) and setCullMode(_:).
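Winding order comes down to the sign of a triangle’s area in screen space. The Swift sketch below assumes 2D screen coordinates with x to the right and y up. The helpers signedArea and isCulledAsBackFace and the Winding enum are hypothetical. On the GPU you don’t write this yourself, because setFrontFacing(_:) and setCullMode(_:) configure the hardware. Metal’s default front-facing winding is clockwise.

```swift
// Signed area of a 2D triangle (x right, y up).
// Positive: vertices wind counter-clockwise. Negative: clockwise.
func signedArea(_ a: SIMD2<Float>, _ b: SIMD2<Float>, _ c: SIMD2<Float>) -> Float {
  let ab = b - a
  let ac = c - a
  return 0.5 * (ab.x * ac.y - ab.y * ac.x)
}

enum Winding { case clockwise, counterClockwise }

// True if back-face culling would discard this triangle.
func isCulledAsBackFace(_ a: SIMD2<Float>, _ b: SIMD2<Float>, _ c: SIMD2<Float>,
                        frontFacing: Winding) -> Bool {
  let area = signedArea(a, b, c)
  if area == 0 { return true }  // degenerate: this sketch simply discards it
  let winding: Winding = area > 0 ? .counterClockwise : .clockwise
  return winding != frontFacing
}

let p0 = SIMD2<Float>(0, 0), p1 = SIMD2<Float>(1, 0), p2 = SIMD2<Float>(0, 1)
let keptWhenCCWIsFront = !isCulledAsBackFace(p0, p1, p2, frontFacing: .counterClockwise)
```

Swapping any two vertices flips the sign of the area, which is why the same triangle can be front-facing or back-facing depending only on vertex order.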

Tiling: Tile Binning

At this point, primitives that have made it through the culling and clipping process are binned into tiles and stored in the Tiled Vertex Buffer, ready to move on to the rasterizer. This is an area of GPU memory that you can’t access. If it gets full during the draw call, the GPU flushes it and does a partial render.
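Binning boils down to asking which tiles a primitive might touch. The sketch below uses the simplest possible test, the triangle’s bounding box. It assumes square 32 × 32 pixel tiles and pixel coordinates with the origin at the top-left. Real GPUs choose their own tile sizes and use a tighter coverage test. tilesTouched(by:tileSize:screenWidth:screenHeight:) is a hypothetical helper.

```swift
// Hypothetical helper: which screen tiles does a triangle's bounding box touch?
// Assumptions: square tiles of `tileSize` pixels, origin at the top-left.
func tilesTouched(by triangle: [SIMD2<Float>], tileSize: Int = 32,
                  screenWidth: Int, screenHeight: Int) -> [(column: Int, row: Int)] {
  let xs = triangle.map { $0.x }
  let ys = triangle.map { $0.y }
  guard let lowX = xs.min(), let highX = xs.max(),
        let lowY = ys.min(), let highY = ys.max() else { return [] }
  // Clamp the bounding box to the screen, in whole pixels.
  let minX = max(0, Int(lowX.rounded(.down)))
  let maxX = min(screenWidth - 1, Int(highX.rounded(.down)))
  let minY = max(0, Int(lowY.rounded(.down)))
  let maxY = min(screenHeight - 1, Int(highY.rounded(.down)))
  // Entirely off-screen: nothing to bin.
  guard minX <= maxX, minY <= maxY else { return [] }
  var tiles: [(column: Int, row: Int)] = []
  for row in (minY / tileSize)...(maxY / tileSize) {
    for column in (minX / tileSize)...(maxX / tileSize) {
      tiles.append((column: column, row: row))
    }
  }
  return tiles
}

let bins = tilesTouched(by: [SIMD2(10, 10), SIMD2(50, 10), SIMD2(10, 40)],
                        screenWidth: 256, screenHeight: 256)
```

This triangle straddles the boundary at x = 32 and y = 32, so it lands in four tiles. Each of those tiles will process it independently in the rendering phase.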

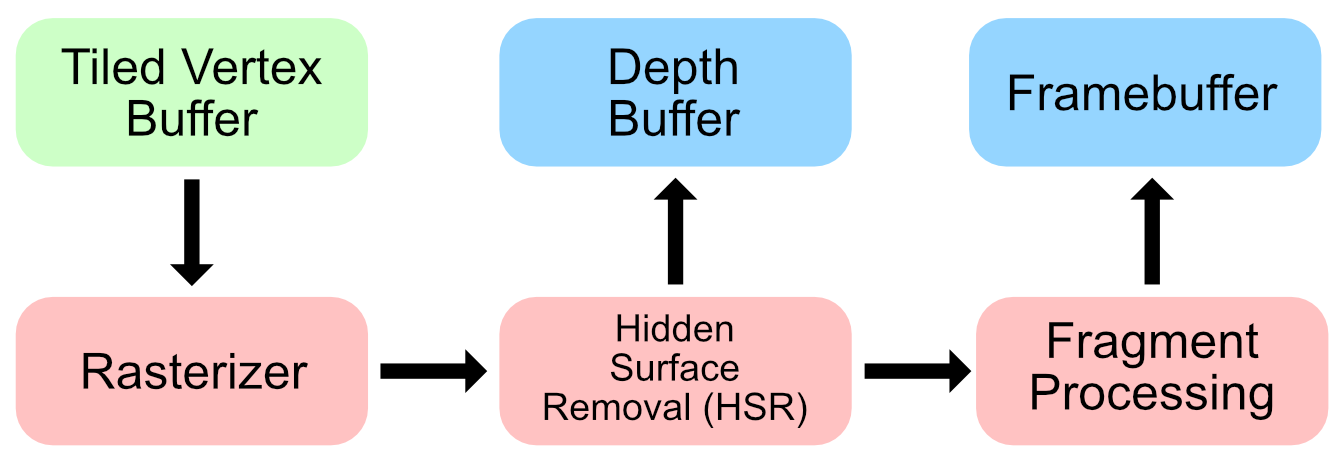

Each tile with its binned vertices now passes through the rendering phase:

Rendering: Rasterization

For each tile, taken from the Tiled Vertex Buffer, the rasterizer processes each primitive (usually triangles) in the scene, and works out which pixels should be rendered.

Rendering: Hidden Surface Removal

The rasterizer stores depth information, so that when a triangle fragment is in front of previous fragments, it keeps that fragment’s information and discards the others.

Traditional GPU architectures had to worry about overdraw, where pixels are rendered on top of one another.

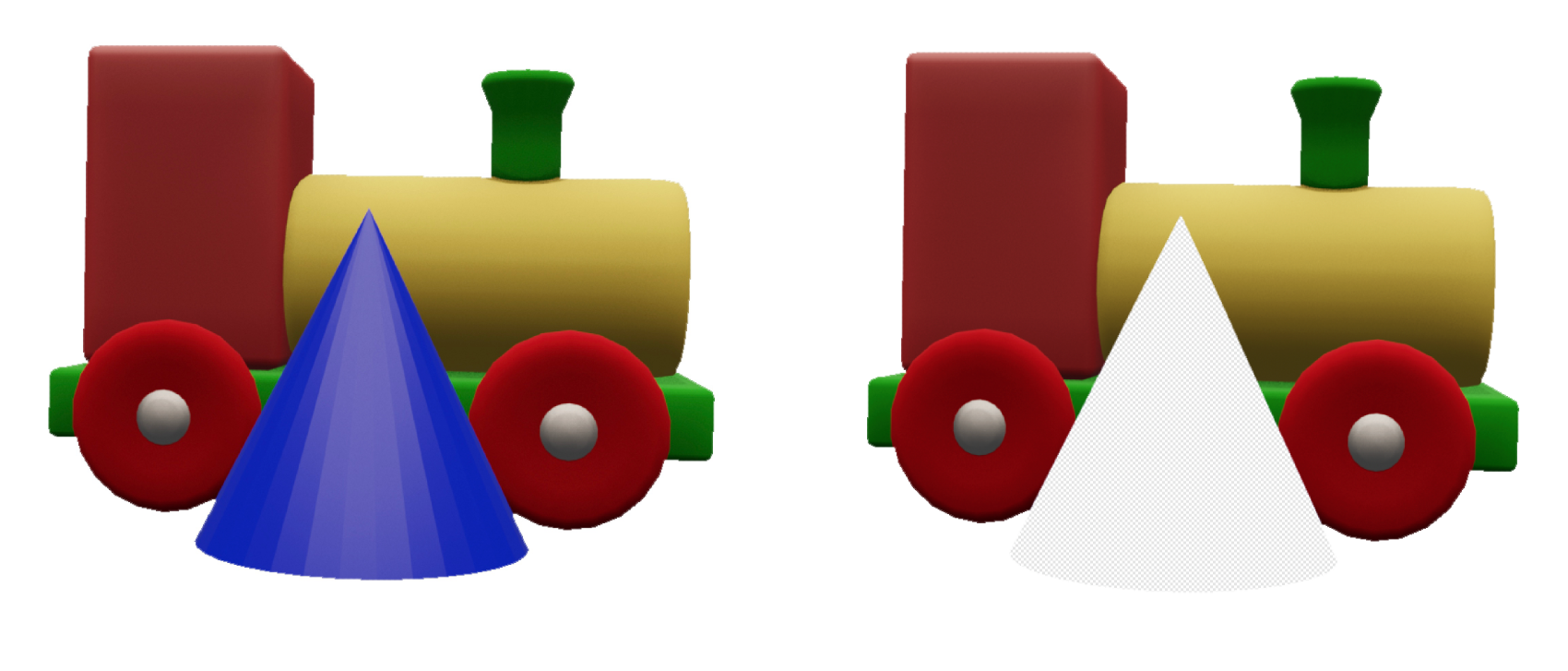

In the following image, a blue cone is rendered in front of the train. In traditional GPUs, if the train is rendered before the cone, then both the yellow train pixels and the blue cone pixels would be calculated.

With TBDR’s Hidden Surface Removal (HSR), the train’s pixels behind the cone won’t be calculated at all, no matter the order the objects are rendered in.
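A toy, single-pixel model shows the difference between the two approaches. In the Swift sketch below, smaller depth means closer, which is an assumption since depth conventions are configurable. The immediate-mode function shades a fragment whenever it’s closer than anything drawn to that pixel so far. Fragment, immediateModeShadeCount and hiddenSurfaceRemoval are hypothetical names. Real HSR hardware is far more involved.

```swift
// One candidate fragment for a single pixel. Assumption: smaller depth is closer.
struct Fragment {
  let depth: Float
  let object: String
}

// Immediate-mode style with a depth test: a fragment is shaded whenever it's closer
// than anything drawn to this pixel so far, so submission order decides wasted work.
func immediateModeShadeCount(_ fragments: [Fragment]) -> Int {
  var closest = Float.infinity
  var shaded = 0
  for fragment in fragments where fragment.depth < closest {
    closest = fragment.depth
    shaded += 1
  }
  return shaded
}

// HSR style: resolve visibility first, then shade only the surviving fragment.
func hiddenSurfaceRemoval(_ fragments: [Fragment]) -> (visible: Fragment?, shaded: Int) {
  let visible = fragments.min { $0.depth < $1.depth }
  return (visible, visible == nil ? 0 : 1)
}

// The train is submitted first, then the cone in front of it.
let trainFragment = Fragment(depth: 0.8, object: "train")
let coneFragment = Fragment(depth: 0.3, object: "cone")
let backToFront = [trainFragment, coneFragment]
let immediateWork = immediateModeShadeCount(backToFront)  // 2: the train was shaded for nothing
let hsrResult = hiddenSurfaceRemoval(backToFront)         // the cone, shaded once
```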

Note: Transparent and translucent objects are a little more complicated, but for now, you’ll just be rendering opaque objects.

Rendering: Fragment Processing

When the rasterizer and HSR processes have worked out the visible fragments, fragment processing can begin. Here’s where you decide the color of each fragment.

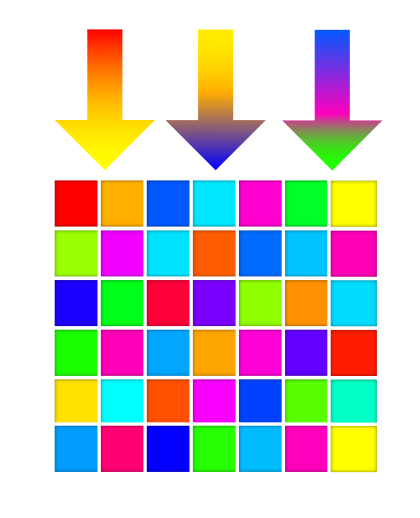

Like vertex processing, the fragment processing stage is programmable. You create a fragment shader function that will receive the lighting, texture coordinate, depth and color information that the vertex function outputs. The fragment shader output is a single color for that fragment. Each of these fragments will contribute to the color of the final pixel in the framebuffer. All of the attributes are interpolated for each fragment.

For example, to render this triangle, the vertex function would process three vertices with the colors red, green and blue. As the diagram shows, each fragment that makes up this triangle is interpolated from these three colors. Linear interpolation blends the colors at two endpoints, weighted by how close each point on the line is to each endpoint. If one endpoint is red and the other is green, the midpoint on the line between them will be an even mix of the two, a dark yellow.

The interpolation equation is parametric and has this form, where parameter p, ranging from 0 to 1, is how much of oldColor1 is present in the result:

newColor = p * oldColor1 + (1 - p) * oldColor2
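Here’s the equation in Swift, using SIMD3<Float> for RGB colors, along with its three-color extension for a triangle, where the three weights (barycentric coordinates) sum to 1. The interpolate functions are hypothetical helpers. This sketch also ignores the perspective correction that the GPU applies to interpolated values by default.

```swift
typealias RGB = SIMD3<Float>

// newColor = p * oldColor1 + (1 - p) * oldColor2, with p in 0...1
func interpolate(_ color1: RGB, _ color2: RGB, p: Float) -> RGB {
  return p * color1 + (1 - p) * color2
}

// Across a triangle: three weights that sum to 1 (barycentric coordinates).
func interpolate(_ c0: RGB, _ c1: RGB, _ c2: RGB, weights w: SIMD3<Float>) -> RGB {
  return w.x * c0 + w.y * c1 + w.z * c2
}

let red = RGB(1, 0, 0)
let green = RGB(0, 1, 0)
let blue = RGB(0, 0, 1)
let midpoint = interpolate(red, green, p: 0.5)  // (0.5, 0.5, 0): a dark yellow
let center = interpolate(red, green, blue, weights: SIMD3<Float>(repeating: 1.0 / 3.0))
```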

Color is easy to visualize, but the other vertex function outputs are also similarly interpolated for each fragment.

Note: If you don’t want a vertex output to be interpolated, add the attribute [[flat]] to its definition.

Creating a Fragment Shader

➤ In Shaders.metal, add the fragment function to the end of the file:

```metal
fragment float4 fragment_main() {
  return float4(1, 0, 0, 1);
}
```

This is the simplest fragment function possible. You return the color red in the form of a float4, which describes a color in RGBA format. All the fragments that make up the cube will be red.

Rendering: Framebuffer

When fragments have been processed, depth (Z) and stencil testing usually take place. Fragments can be discarded if they fail the depth test or are masked by a stencil buffer. An MTLDepthStencilState object, set on the render command encoder, defines the rules for testing. You’ll create your first depth stencil state in Chapter 7, “The Fragment Function”.

Finally, the GPU blends the final colors and writes them to the render target, a special texture also known as the framebuffer. The system then presents the framebuffer to the screen and refreshes the view’s pixel colors every frame. But it wouldn’t be safe to write colors to the framebuffer while it’s being displayed on the screen.

A technique known as double-buffering is used to solve this situation. While the first buffer is being displayed on the screen, the second one is updated in the background. Then, the two buffers are swapped, and the second one is displayed on the screen while the first one is updated, and the cycle continues.
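The swap can be modeled with two buffers and an index that records which one is on screen. DoubleBuffer is a hypothetical type in which each buffer holds only a label for the frame it contains. In practice, CAMetalLayer keeps a small pool of drawables, and its maximumDrawableCount defaults to 3. The principle is the same: you never draw into the buffer that’s being displayed.

```swift
// Hypothetical double-buffered framebuffer; each buffer holds a frame label.
struct DoubleBuffer {
  private var buffers = ["", ""]
  private var frontIndex = 0

  // The buffer the display is currently showing.
  var front: String { buffers[frontIndex] }

  // Draw only into the back buffer, so the visible image is never half-written.
  mutating func render(_ frame: String) {
    buffers[1 - frontIndex] = frame
  }

  // Swap roles: the finished back buffer becomes the one on screen.
  mutating func swap() {
    frontIndex = 1 - frontIndex
  }
}

var screen = DoubleBuffer()
screen.render("frame 1")  // drawn off-screen; nothing visible yet
screen.swap()             // "frame 1" is now displayed
screen.render("frame 2")  // safe: the display still shows "frame 1"
```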

Whew! That was a lot of information to take in. However, the code you’ve written is what every Metal renderer uses, and despite just starting out, you should begin to recognize the rendering process when you look at Apple’s sample code.

➤ Build and run the app, and you’ll see a beautifully rendered red cube:

Notice how the cube isn’t square. Remember that Metal uses Normalized Device Coordinates (NDC), which range from -1 to 1 on both the X and Y axes, whatever the shape of the window. Resize your window, and the cube will maintain a size relative to the size of the window. In Chapter 6, “Coordinate Spaces”, you’ll learn how to position objects precisely on the screen.
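A worked example shows why the cube isn’t square. It makes three assumptions: an 800 by 600 pixel drawable, a viewport covering the whole drawable, and this chapter’s pass-through vertex function. Under those assumptions, the cube’s front face spans -0.4 to 0.4 on both X and Y in NDC. The hypothetical helper ndcToPixel(_:width:height:) maps NDC to pixels, flipping y because NDC’s y points up while pixel rows count down from the top.

```swift
// Hypothetical helper: NDC (-1...1, y up) to pixels (origin top-left, y down).
func ndcToPixel(_ ndc: SIMD2<Float>, width: Float, height: Float) -> SIMD2<Float> {
  let x = (ndc.x + 1) * 0.5 * width
  let y = (1 - ndc.y) * 0.5 * height
  return SIMD2(x, y)
}

// Assumed drawable size; the face's corners come straight from the cube's vertices.
let drawableWidth: Float = 800
let drawableHeight: Float = 600
let topLeft = ndcToPixel(SIMD2(-0.4, 0.4), width: drawableWidth, height: drawableHeight)
let bottomRight = ndcToPixel(SIMD2(0.4, -0.4), width: drawableWidth, height: drawableHeight)
let sizeInPixels = bottomRight - topLeft  // roughly (320, 240): not square
```

The same 0.8 NDC units cover 40% of each axis. That works out to 320 pixels across but only 240 pixels down, so the square face is stretched to the window’s aspect ratio.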

What an incredible journey you’ve had through the rendering pipeline. In the next chapter, you’ll explore vertex and fragment shaders in greater detail.

Challenge

Using the train.usdz model in the resources folder for this project, replace the cube with this train. When importing the model, be sure to copy files to destination, and remember to add the model to the target.

Change the model’s vertical position in the vertex function using this code:

```metal
float4 position = vertexIn.position;
position.y -= 1.0;
return position;
```

Finally, color your train blue.

Refer to the previous chapter for asset loading and the vertex descriptor code if you need help. The finished code for this challenge is in the project challenge directory for this chapter.

Key Points

- CPUs are best for processing sequential tasks fast, whereas GPUs excel at processing many small tasks in parallel.

- SwiftUI is a great host for MTKViews, as you can layer UI elements easily.

- Move as much Metal work as you can to the initialization phase. Initialize the device, command queues, pipeline states and model data buffers once at the start of your app.

- Each frame, create a command buffer and one or more command encoders.

- GPU architecture requires a strict pipeline. Configure this using PSOs (pipeline state objects).

- There are two programmable stages in a simple rendering GPU pipeline. You calculate vertex positions using the vertex shader, and calculate the color that appears on the screen using the fragment shader.