Building an Action for Google Assistant: Getting Started

In this tutorial, you’ll learn how to create a conversational experience with Google Assistant. By Jenn Bailey.


Contents


30 mins

- VUI and Conversational Design

- Designing an Action’s Conversation

- Getting Started

- Checking Permissions Settings

- Creating the Action Project

- Creating the Dialogflow Agent

- Agents and Intents

- Running your Action

- Modifying the Welcome Intent

- Testing Actions on a Device

- Uploading the Dialogflow Agent

- Fulfillment

- Setting up the Local Development Environment

- Installing and Configuring Google Cloud SDK

- Configuring the Project

- Opening the Project and Deploying to the App Engine

- Implementing the Webhook

- Viewing the Stackdriver Logs

- Creating and Fulfilling a Custom Intent

- Creating the Intent

- Fulfilling the Intent

- Detecting Surfaces

- Handling a Confirmation Event

- Using an Option List

- Entities and Parameters

- Entities

- Action and Parameters

- Using an Entity and a Parameter

- Where to Go From Here?

Viewing the Stackdriver Logs

To see the output of the println statement, view the Stackdriver logs. You can open them from the simulator by expanding the menu at the top left of the simulator and selecting View Stackdriver Logs.

Then use the log filter to find your text.
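Because every request writes to the same log, a distinctive prefix on your own println output makes it easy to filter for. Here is a minimal sketch; the RWPODCAST tag and the logTagged helper are hypothetical and not part of the starter project.

```kotlin
// Hypothetical tag: pick any string that won't appear in platform log lines.
const val LOG_TAG = "RWPODCAST"

// Hypothetical helper: formats a message with the tag, prints it with println
// (the same output the Stackdriver logs show), and returns the formatted line.
fun logTagged(event: String, detail: String): String {
    val line = "[$LOG_TAG] $event: $detail"
    println(line)
    return line
}
```

Filtering the log viewer for RWPODCAST then shows only the lines your webhook printed this way.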

Note: There can be only 15 versions of the app in production, and by default each deploy to App Engine creates a new version. To manage them, expand the sidebar menu and, under App Engine, select Versions.

From there, you can delete every version except the active one if you've reached or exceeded 15.
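The selection rule in the note can be expressed as a small function: never touch the active version, and delete the oldest other versions until the next deploy has room. This is a sketch over a hypothetical AppVersion model; it doesn't call any Google Cloud API, and freeing exactly one slot is an assumed policy.

```kotlin
// Hypothetical model of a version as listed under App Engine ▸ Versions.
data class AppVersion(val id: String, val deployedAt: Long)

// Returns the ids of the oldest non-active versions to delete so that one slot
// is free for the next deploy. The default limit of 15 comes from the note;
// the active version is never selected.
fun versionsToDelete(versions: List<AppVersion>, activeId: String, limit: Int = 15): List<String> {
    // How many versions must go to leave exactly one free slot.
    val excess = versions.size - (limit - 1)
    if (excess <= 0) return emptyList()
    return versions
        .filter { it.id != activeId }
        .sortedBy { it.deployedAt }
        .take(excess)
        .map { it.id }
}
```

You would still delete the returned versions yourself from the Versions page.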


Creating and Fulfilling a Custom Intent

Creating the Intent

In Dialogflow, go to Intents ▸ Create Intent or press the plus sign next to Intents. Enter the name play_the_latest_episode and add the Training Phrases shown below:

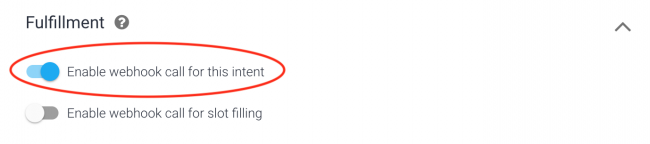

Under Fulfillment, turn on Enable webhook call for this intent, then click Save.

Fulfilling the Intent

Add a handler for this intent to RWPodcastApp.kt, below the welcome intent:

```kotlin
@ForIntent("play_the_latest_episode")
fun playLatestEpisode(request: ActionRequest): ActionResponse {
    val responseBuilder = getResponseBuilder(request)

    // Index 0 of the feed's item array is the most recent episode.
    val episode1 = jsonRSS.getJSONObject(rss).getJSONObject(channel)
        .getJSONArray(item).getJSONObject(0)

    // Describe the episode for the media player: title, summary, audio URL and artwork.
    val mediaObjects = ArrayList<MediaObject>()
    mediaObjects.add(
        MediaObject()
            .setName(episode1.getString(title))
            .setDescription(episode1.getString(summary))
            .setContentUrl(episode1.getJSONObject(enclosure).getString(audioUrl))
            .setIcon(
                Image()
                    .setUrl(logoUrl)
                    .setAccessibilityText(requestBundle.getString("media_image_alt_text"))))

    // Intro text, suggestion chips, then the audio itself.
    responseBuilder
        .add(requestBundle.getString("latest_episode"))
        .addSuggestions(suggestions)
        .add(MediaResponse().setMediaObjects(mediaObjects).setMediaType("AUDIO"))

    return responseBuilder.build()
}
```

What’s going on in the code above?

- The code creates a responseBuilder to return the response.
- The latest episode is parsed from the RSS feed into episode1.
- The text string called latest_episode is fetched from resources_en_US.properties in the resources folder and added to the response. Strings can be stored in this location much like the strings.xml file in an Android app, making it easier to translate the Action later.
- A MediaObject is created and populated with fields from episode1, including the title, the description, a URL to an image and the link to the audio file.
- Suggestion chips are added to the response to make it easy for the user to select a valid option.
- The response is built and returned.
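The latest_episode lookup works like any Java resource bundle. The sketch below loads the properties text from a string so it runs on its own; the English value shown is a hypothetical example, and the real project reads resources_en_US.properties from the resources folder instead.

```kotlin
import java.io.StringReader
import java.util.PropertyResourceBundle
import java.util.ResourceBundle

// Stand-in for the contents of resources_en_US.properties. The key comes from
// the tutorial; the English text is a hypothetical example value.
val enUsProperties = """
    latest_episode=Here's the latest episode.
""".trimIndent()

// Loads properties text into a bundle. In the real project the bundle is read
// from the resources folder rather than from a string.
fun loadBundle(properties: String): ResourceBundle =
    PropertyResourceBundle(StringReader(properties))
```

A translated file would carry the same keys with different values; with Java's naming convention, ResourceBundle.getBundle("resources", locale) picks the file whose suffix matches the locale, which is why keeping strings here eases translation.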

Save RWPodcastApp.kt and deploy it to App Engine from the Gradle tab. After you've deployed once from the Gradle tab, you can use the Run button at the top of Android Studio to run the same deploy command again. Now, run the test action again on your phone or in the simulator. When the action welcomes you, respond with the words Latest episode. The latest episode of the podcast should play, and a media player appears.

Detecting Surfaces

You can detect which kind of device, or surface, started an action. Replace the body of welcome in RWPodcastApp.kt with the following:

```kotlin
// Fetch the podcast feed and convert the XML into JSON for easier lookups.
xml = URL(rssUrl).readText()
jsonRSS = XML.toJSONObject(xml)

val responseBuilder = getResponseBuilder(request)
val episodes = jsonRSS.getJSONObject(rss).getJSONObject(channel)
    .getJSONArray(item)

if (!request.hasCapability(Capability.SCREEN_OUTPUT.value)) {
    if (!request.hasCapability(Capability.MEDIA_RESPONSE_AUDIO.value)) {
        // 1
        responseBuilder.add(requestBundle.getString("msg_no_media"))
    } else {
        // 2
        responseBuilder
            .add(requestBundle.getString("conf_placeholder"))
            .add(Confirmation()
                .setConfirmationText(requestBundle.getString("conf_text")))
    }
}

return responseBuilder.build()
```

Here are some things to notice about the code above:

1. If the current conversation has neither actions.capability.SCREEN_OUTPUT nor MEDIA_RESPONSE_AUDIO, the device doesn't support media playback, so the user is told that the episode can't be played.
2. If the device supports audio but has no screen, ask the user whether to play the latest episode. This makes the action more convenient on a hands-free, screen-free surface that relies on voice control alone. Confirmation is a helper that asks for a yes or no response.
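Separating the capability check from response building makes the branching easy to test without a device. This sketch works on plain capability strings rather than the library's ActionRequest; the enum and function names are hypothetical.

```kotlin
// Capability strings as reported for the current conversation.
const val SCREEN_OUTPUT = "actions.capability.SCREEN_OUTPUT"
const val MEDIA_RESPONSE_AUDIO = "actions.capability.MEDIA_RESPONSE_AUDIO"

// Hypothetical labels for the kinds of reply the welcome intent can give.
enum class WelcomeReply { NO_MEDIA_MESSAGE, ASK_CONFIRMATION, SHOW_EPISODE_LIST }

// Mirrors the branching in welcome: a screen goes to the branch that shows
// episodes on screen; audio without a screen asks for a yes/no confirmation;
// neither means the episode can't be played.
fun chooseWelcomeReply(capabilities: Set<String>): WelcomeReply = when {
    SCREEN_OUTPUT in capabilities -> WelcomeReply.SHOW_EPISODE_LIST
    MEDIA_RESPONSE_AUDIO in capabilities -> WelcomeReply.ASK_CONFIRMATION
    else -> WelcomeReply.NO_MEDIA_MESSAGE
}
```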

Note: The play_latest_episode_confirmation Intent in Dialogflow has an actions_intent_confirmation Event. An Event is another way to trigger an intent with predefined values such as yes or no.


Find the play_latest_episode_confirmation intent in the Dialogflow Intents list. Enable the webhook call for this intent and save it.

Handling a Confirmation Event

Since you created a Confirmation above, you need to handle the user's response. Add the code shown below to RWPodcastApp.kt, inside the handler for the play_latest_episode_confirmation intent:

```kotlin
// Uses the responseBuilder already declared in this handler.
val episode1 = jsonRSS.getJSONObject(rss).getJSONObject(channel)
    .getJSONArray(item).getJSONObject(0)

if (request.getUserConfirmation()) {
    // The user said yes: play the latest episode, as in play_the_latest_episode.
    val mediaObjects = ArrayList<MediaObject>()
    mediaObjects.add(
        MediaObject()
            .setName(episode1.getString(title))
            .setDescription(getSummary(episode1))
            .setContentUrl(episode1.getJSONObject(enclosure).getString(audioUrl))
            .setIcon(
                Image()
                    .setUrl(logoUrl)
                    .setAccessibilityText(requestBundle.getString("media_image_alt_text"))))

    responseBuilder
        .add(requestBundle.getString("latest_episode"))
        .addSuggestions(suggestions)
        .add(MediaResponse().setMediaObjects(mediaObjects).setMediaType("AUDIO"))

    return responseBuilder.build()
}
// Otherwise, the rest of the handler prompts the user to do something else.
```

Here’s what’s happening above:

- If the confirmation parameter is true, the user confirmed they want to play the podcast. You then fetch and play the episode the same way you do in the play_the_latest_episode intent.
- If not, the user doesn't want to play the podcast. Prompt the user to trigger other intents.
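The yes or no answer reaches your webhook as data in the request, and getUserConfirmation() reads it for you. The sketch below uses a simplified, hypothetical model of that data, assuming (as in the Actions on Google request format) that the helper's result arrives as an argument named CONFIRMATION with a boolean value, to show the two branches described above. The reply texts are hypothetical.

```kotlin
// Simplified, hypothetical stand-in for an argument in the webhook request.
data class Argument(val name: String, val boolValue: Boolean?)

// Treats a missing or non-boolean CONFIRMATION argument as "no", so the
// conversation falls back to prompting the user instead of failing.
fun userConfirmed(arguments: List<Argument>): Boolean =
    arguments.firstOrNull { it.name == "CONFIRMATION" }?.boolValue ?: false

// Hypothetical reply texts for the two branches.
fun confirmationReply(arguments: List<Argument>): String =
    if (userConfirmed(arguments)) "Playing the latest episode."
    else "Okay. You can ask for the latest episode or browse recent episodes."
```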

Save RWPodcastApp.kt and press Run to deploy the changes to App Engine. Open the test app in the simulator and say Talk to my Test App.

Select Speaker as the surface and walk through the conversation to play the latest episode. Also try answering “no” when prompted.

Using an Option List

You can also set up an option list to show the user what they can do. Add the following else block below the outer if block in the welcome intent:

```kotlin
else {
    // The device has a screen: list up to ten of the most recent episodes.
    // episodes was parsed at the top of welcome.
    val items = ArrayList<ListSelectListItem>()
    for (i in 0 until minOf(10, episodes.length())) {
        val episode = episodes.getJSONObject(i)
        items.add(
            ListSelectListItem()
                .setTitle(episode.getString(title))
                .setDescription(getSummary(episode))
                .setImage(
                    Image()
                        .setUrl(logoUrl)
                        .setAccessibilityText(requestBundle.getString("list_image_alt_text")))
                // The key is the episode's index, which get_episode_option uses to find it.
                .setOptionInfo(OptionInfo().setKey(i.toString())))
    }

    responseBuilder
        .add(requestBundle.getString("recent_episodes"))
        .add(SelectionList().setItems(items))
        .addSuggestions(suggestions)
}
```

The code above creates a list that displays up to ten of the most recent episodes. It then adds suggestion chips with addSuggestions.

The get_episode_option intent, which you'll implement next, handles the event when the user selects an episode from the list. The user may also tap one of the suggestion chips, which you're required to provide after a list.
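The option key is what links a tapped list item back to an episode. Here is a minimal sketch of that pairing, using hypothetical Episode and ListItem data classes in place of the RSS JSON and ListSelectListItem, and capping the list at the number of episodes actually available.

```kotlin
// Hypothetical, simplified stand-ins for a parsed RSS entry and a list item.
data class Episode(val title: String, val summary: String)
data class ListItem(val key: String, val title: String, val description: String)

// Builds at most maxItems entries in feed order (newest first), keyed by each
// episode's index so the selected key maps back to the same episode.
fun buildEpisodeList(episodes: List<Episode>, maxItems: Int = 10): List<ListItem> =
    episodes.take(maxItems).mapIndexed { index, episode ->
        ListItem(key = index.toString(), title = episode.title, description = episode.summary)
    }
```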

Note: In Dialogflow, be sure to add actions_intent_option as an intent and enable the webhook for it. Also, add the Google Assistant Option event in the Events section.


Add the following code inside the get_episode_option intent before the return statement:

```kotlin
// The selected option's key is the episode's index, set when the list was built.
val selectedItem = request.getSelectedOption()
val option = selectedItem!!.toInt()
val episode = jsonRSS.getJSONObject(rss).getJSONObject(channel)
    .getJSONArray(item).getJSONObject(option)

val mediaObjects = ArrayList<MediaObject>()
mediaObjects.add(
    MediaObject()
        .setName(episode.getString(title))
        .setDescription(episode.getString(summary))
        .setContentUrl(episode.getJSONObject(enclosure).getString(audioUrl))
        .setIcon(
            Image()
                .setUrl(logoUrl)
                .setAccessibilityText(requestBundle.getString("media_image_alt_text"))))

responseBuilder
    .add(episode.getString(title))
    .addSuggestions(suggestions)
    .add(MediaResponse().setMediaObjects(mediaObjects).setMediaType("AUDIO"))
```

In the code above, the key of the selected option is converted back to the episode's index, and the MediaObject is created from the episode at that index. The code then adds the title, media object and suggestion chips to responseBuilder, and the existing return statement returns the built response.
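The handler above uses selectedItem!!.toInt(), which throws if no option is present or the key isn't a number. A more defensive variant, sketched here as a hypothetical helper that takes the key string and the episode count, returns null so the handler could answer with a fallback prompt instead.

```kotlin
// Hypothetical helper: maps the selected option key back to an index,
// returning null when the key is missing, non-numeric, or out of range.
fun selectedEpisodeIndex(selectedKey: String?, episodeCount: Int): Int? {
    val index = selectedKey?.toIntOrNull() ?: return null
    return if (index in 0 until episodeCount) index else null
}
```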

Save and deploy the changes. Then, test the app on a phone to see the list.

When the user selects a podcast from the list, it plays. Nice, right?