OpenGL ES Particle System Tutorial: Part 1/3

Learn how to develop a particle system using OpenGL ES 2.0 and GLKit! This three-part tutorial covers point sprites, particle effects, and game integration. By Ricardo Rendon Cepeda.

Contents

50 mins

- What’s a Particle System?

- What are Point Sprites?

- Getting Started

- Basic Drawing

- Designing Your Particle System

- Implementing Your Particle System

- Adding Vertex and Fragment Shaders

- Creating Shaders as GLSL Programs

- Building Obj-C Bridges

- Sending Shader Data to the GPU

- Adding Particle Shader Variances

- Animating Your Polar Rose

- Using Textures and Point Sprites

- Where To Go From Here?

In this three-part OpenGL ES particle system tutorial series, you’ll learn how to make a cool and fun particle system from scratch, and integrate it into an iOS app!

Here’s how the series will be organized:

- Part 1: You are here! You’ll start by learning all about particle systems and point sprites, and create a small app to help you learn as you go.

- Part 2: In the next part, you’ll learn to create a generic particle-emitter paired system. That’s code word for “awesome and reusable”.

- Part 3: Finally, you’ll use your newly developed skills to integrate particle effects into a simple 2D game.

This tutorial series assumes you have some prior familiarity with OpenGL ES 2.0 and GLKit. If you are new to these, you should first read our Beginning OpenGL ES 2.0 with GLKit and How To Create A Simple 2D iPhone Game with OpenGL ES 2.0 and GLKit tutorial series.

Without further ado, let’s spawn some particles!

What’s a Particle System?

New to particle systems? Don’t worry — we’ve got you covered.

You can think of a particle system as having two levels:

- At the top you have the emitter: the source, or generator, that controls the overall behaviour of the particles.

- At the bottom you have the particles themselves, a large collection of small objects which share very similar characteristics, but are all unique independent entities.

Precipitation is a great example of a particle system. At the top, you have a cloud which is the emitter in this system. Depending on weather conditions, the cloud may produce rain, hail, or snow, which would be the particles.

Each particle in the system has a different size, consistency, and starting position. Together, their properties form a particle system.
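The two levels described above can be sketched as plain C structs, which also compile as Objective-C. This is only an illustration under assumed names: `Particle`, `Emitter`, `emitter_init`, `MAX_PARTICLES`, and every field are hypothetical and are not the layout this tutorial builds later.

```c
#include <stddef.h>

#define MAX_PARTICLES 360  /* hypothetical capacity, chosen for illustration */

/* Bottom level: one small object with its own unique properties. */
typedef struct {
    float size;    /* differs from particle to particle */
    float startX;  /* starting position */
    float startY;
} Particle;

/* Top level: the source that owns the particles and holds shared behaviour. */
typedef struct {
    Particle particles[MAX_PARTICLES];
    size_t   count;    /* number of particles in use */
    float    gravity;  /* shared setting applied to every particle */
} Emitter;

/* Fill the emitter with `count` particles spread evenly along x in [0, 1). */
static void emitter_init(Emitter *e, size_t count, float gravity) {
    if (count > MAX_PARTICLES) {
        count = MAX_PARTICLES;  /* clamp to the fixed capacity */
    }
    e->count = count;
    e->gravity = gravity;
    for (size_t i = 0; i < count; i++) {
        e->particles[i].size   = 1.0f + (float)(i % 3);  /* cycles 1, 2, 3 */
        e->particles[i].startX = (float)i / (float)count;
        e->particles[i].startY = 1.0f;  /* every particle starts at the "cloud" */
    }
}
```

In the precipitation analogy, the `Emitter` plays the cloud and each `Particle` is one raindrop: the emitter holds the settings shared by all drops, while each drop keeps its own size and starting position.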

That should give you a basic understanding of particle systems, but there’s another concept to discuss as well — point sprites.

What are Point Sprites?

In computer graphics, a sprite is simply a stand-alone 2D image within a scene. For example, a single block in Tetris is a sprite, as is a coin in Super Mario. If you’ve developed graphics applications before, you may be familiar with sprites as textured quads: essentially a pair of triangles that form a rectangular area for a 2D texture.

A great example of a sprite is the little creature below, taken from the tutorial How To Create A Simple 2D iPhone Game with OpenGL ES 2.0 and GLKit:

Using this triangle-based implementation of textured quads requires at least 4 vertices per sprite. Particle systems routinely deal with hundreds of units — that means a LOT of vertices!

Thankfully, OpenGL ES 2.0 makes sprite rendering a lot easier with GL_POINTS. This drawing mode tells the GPU to draw every vertex as a square point, which reduces your 4-vertices-per-sprite problem to just 1!

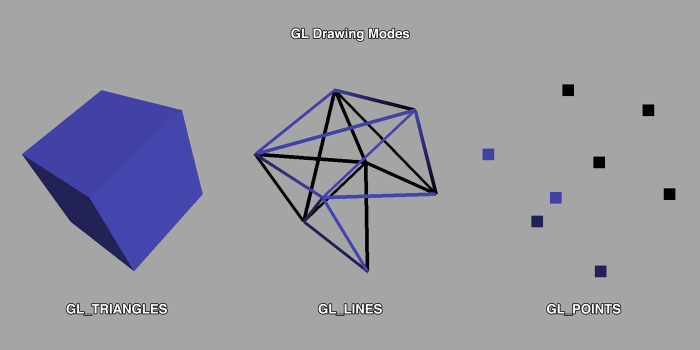

So while GL_TRIANGLES draws filled triangles and GL_LINES draws line segments, GL_POINTS is a completely different beast: it draws a single point for each vertex (which you can map a texture to).
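To put numbers on the saving, here is a minimal sketch in plain C (also valid Objective-C) that compares the two approaches. The function names are hypothetical, and the quad figure assumes the two triangles share their vertices, which is the 4-vertices-per-sprite case mentioned above.

```c
#include <stddef.h>

/* Vertices needed when every sprite is a textured quad.
   Assumes the two triangles share vertices, giving 4 per sprite. */
static size_t quad_vertex_count(size_t sprites) {
    return sprites * 4;
}

/* Vertices needed when every sprite is a single point. */
static size_t point_vertex_count(size_t sprites) {
    return sprites;
}
```

For 500 particles, `quad_vertex_count(500)` gives 2000 vertices while `point_vertex_count(500)` gives 500. The second figure is the vertex count you would pass to `glDrawArrays` when drawing with `GL_POINTS` as the mode.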

Now that you’re well-versed in particle system lingo and GL_POINTS, it’s time to get started building your first particle system.

Getting Started

Although Xcode comes with an OpenGL game template, the code mixes GLKBaseEffect with OpenGL ES 2.0, and is generally confusing and overwhelming.

Instead, you’ll start from scratch, which will be a nearly painless process thanks to GLKit.

Open Xcode and go to File\New\Project…. Select iOS\Application\Empty Application, name your project GLParticles1, and choose iPhone for device family. Make sure Use Automatic Reference Counting is selected, click Next, choose a folder to save your project to, and click Create.

You want this app to run in portrait orientation only, so click on your GLParticles1 project in the Project Navigator and select GLParticles1 under TARGETS. In the Summary tab, under Supported Interface Orientations, make sure only the Portrait option is selected, as shown below:

As the tutorial title indicates, you’ll be using both OpenGL ES 2.0 and GLKit, so you need to add both frameworks to your project.

In the Project Navigator, click on your GLParticles1 project and select GLParticles1 under TARGETS. In the Build Phases tab, expand the Link Binary With Libraries section, click the + button, find OpenGLES.framework, and click Add, as shown in the screenshot below:

Repeat the steps above, but this time for GLKit.framework.

Now that you have the requisite frameworks in place, you’ll do some basic setup to color your screen green with OpenGL ES.

Basic Drawing

Go to File\New\File…, choose the iOS\User Interface\Storyboard template and name it MainStoryboard.storyboard. Open MainStoryboard.storyboard and drag a GLKit View Controller onto the storyboard. You can find GLKit View Controller in the Object Library in the lower right of the screen, as shown in the following image:

As this is your first and only view controller, Xcode will automatically set it up as the initial view controller.

This view controller will be governed by your custom code, so you’ll need a subclass.

Go to File\New\File… and choose the iOS\Cocoa Touch\Objective-C class subclass template. Enter MainViewController for the Class and GLKViewController for the subclass. Make sure Targeted for iPad and With XIB for user interface are unchecked, click Next, and click Create.

Xcode doesn’t know about GLKViewController yet, so it flags your new class. To clear this up, open MainViewController.h and add the following import:

```objc
#import <GLKit/GLKit.h>
```

Now open MainStoryboard.storyboard, select your GLKit View Controller, and find the Custom Class in the Identity Inspector. Set the Class to MainViewController, as shown below:

So far so good, but you still need to configure your project to use this storyboard.

In the Project Navigator, click on your GLParticles1 project and select GLParticles1 under TARGETS. In the Summary tab, find iPhone / iPod Deployment Info, and set the Main Storyboard to MainStoryboard.

Next, open AppDelegate.m and replace application:didFinishLaunchingWithOptions: with the following code:

```objc
- (BOOL)application:(UIApplication *)application didFinishLaunchingWithOptions:(NSDictionary *)launchOptions
{
    return YES;
}
```

This basically removes the boilerplate code that creates an empty window, since you’re now loading your user interface from the Storyboard instead.

You’re almost done. GLKit requires just a tiny bit of setup code in order to work with your app.

Open MainViewController.m and replace its contents with the following code:

```objc
#import "MainViewController.h"

@implementation MainViewController

- (void)viewDidLoad
{
    [super viewDidLoad];

    // Set up context
    EAGLContext* context = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES2];
    [EAGLContext setCurrentContext:context];

    // Set up view
    GLKView* view = (GLKView*)self.view;
    view.context = context;
}

#pragma mark - GLKViewDelegate

- (void)glkView:(GLKView *)view drawInRect:(CGRect)rect
{
    // Set the background color (green)
    glClearColor(0.30f, 0.74f, 0.20f, 1.0f);
    glClear(GL_COLOR_BUFFER_BIT);
}

@end
```

This very simple GLKViewController implementation creates an OpenGL ES 2.0 context, makes it the current context, and associates it with the view. It also implements glkView:drawInRect: to clear the screen to a green color.
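The color components passed to `glClearColor` are floats in the range 0 to 1 rather than the 0 to 255 values many color pickers report. As a small aside, the plain C helper below (also valid Objective-C) converts one 8-bit channel to that range. The name `channel_to_float` is hypothetical and is not part of OpenGL ES or GLKit.

```c
/* Convert one 8-bit color channel (0-255) to the 0.0-1.0 range
   that glClearColor expects for each component. */
static float channel_to_float(unsigned char channel) {
    return (float)channel / 255.0f;
}
```

For example, `channel_to_float(51)` comes out at roughly 0.20, which matches the blue component of the green used above.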

Believe it or not, that’s all you need to do to implement GLKit in an app. See — told you it would be painless! :]

Build and run your app — you should see the stunning image below:

Admittedly, it’s just a green screen — but that tells you that GLKit is up and running, and ready for you to implement your particle system.